Each week we find a new topic for our readers to learn about in our AI Education column.

What if one-size-fits-all really meant that one size of something could potentially fit each of the 8 billion-plus adults and school-age children scampering around the surface of Earth?

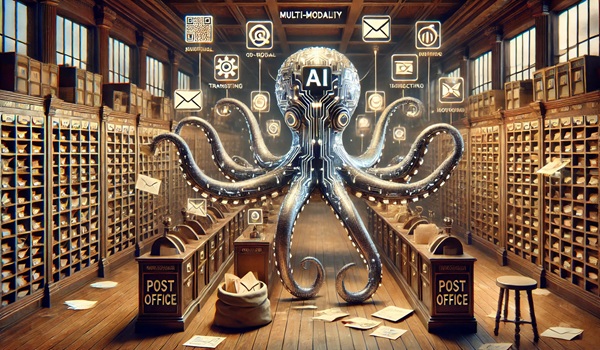

Welcome to another AI Education, where this week we’re going to take a look at one of the real benefits of artificial intelligence when compared to previous iterations of software technology—multi-modality. This time around we’re going to zoom out and discuss multi-modality in general and some of the areas where it intersects artificial intelligence.

A modality, for our purposes, describes how something happens—the way a user or participant, be it a human at a computer or the software within that computer, encounters data, and the form that software presents its output in.

What Is Multi-Modality?

Put simply, multi-modality is the ability to handle, digest or generate different forms and formats of materials. So, in transportation, multi-modality refers to the use of different types of transport—the movement of an object or package or container from ship to train to truck, for example. In education, multi-modality takes on a definition more relevant to our technology, namely, the delivery of information and knowledge in different formats, from texts and workbooks students read and write in at home, to video and audio played in a physical or digital classroom, to real-life, tangible experiences like dissections or other laboratory work.

Multimodality is related to but not synonymous with inter-modality. Inter-modality refers to the ability to move between different modalities—so in transportation, it would describe a facility that moves cargo between boats, airplanes, trains, trucks and other transportation modalities. In education, inter-modality would be asking a student who was instructed by reading a text to create an image or a song using the information they learned.

What Is AI Multimodality?

When it comes to AI, we can first think of multimodality coming from three different directions. First, it’s multi-modal learning, which refers to the kind of data we train our AI on—large language models can be trained solely on huge blocks of text, but the technology underlying these models can also be trained with data sets consisting of numbers and tables, speech, video, photography and art, among other modalities. AI capable of multi-modal learning is capable of processing and then integrating all of these different kinds of data, greatly expanding the potential size of the data sets used to train artificial intelligence models.

Second, multimodality describes how we, the users, interact with AI—I think many of us are accustomed to interacting with chatbots via typed text, and, thanks to automated call centers and smart speakers, most of us have probably interacted with AI via speech as well. But there are other methods for querying AI, including visual inputs like movement or video, that have implications for software’s user interface, enabling greater accessibility and ease of use.

Finally, multimodality can also refer to the output of an artificial intelligence. To this point, many of us have interacted with AIs that offer us either/or outputs: one model generates great text content, while another may create our podcasts and still another our pictures and images. Truly multi-modal models can generate a combination of some or all of the above, and more. A multi-modal model could write not only a nice-looking bit of HTML for a website, but also the content text and images for each of our pages.

In AI, intermodality describes a model’s ability to move from one kind of input to a different type of output, such as speech-to-text or text-to-image. Traditional AI models usually operated on a single type of data and offered a single type of output. We can refer to these types of models as “unimodal.” OpenAI’s ChatGPT was initially launched as a unimodal model, a text-to-text chatbot.
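To make the three terms concrete, here is a minimal Python sketch. The `ModelProfile` class, its method names and the example models are hypothetical, invented only for illustration, and the rules are a simplification: the sketch assumes "unimodal" means one kind of data in and the same kind out, and that any accepted input can be turned into any listed output.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical description of what a model accepts and produces."""
    name: str
    inputs: FrozenSet[str]
    outputs: FrozenSet[str]

    def is_unimodal(self) -> bool:
        # Assumption: one kind of data in, the same single kind out.
        return len(self.inputs) == 1 and self.inputs == self.outputs

    def is_multimodal(self) -> bool:
        # Handles more than one modality on the input or the output side.
        return len(self.inputs) > 1 or len(self.outputs) > 1

    def is_intermodal(self) -> bool:
        # Some input modality differs from some output modality.
        return any(i != o for i in self.inputs for o in self.outputs)


chatbot = ModelProfile("text chatbot", frozenset({"text"}), frozenset({"text"}))
transcriber = ModelProfile("transcriber", frozenset({"speech"}), frozenset({"text"}))
assistant = ModelProfile(
    "assistant",
    frozenset({"text", "image", "audio"}),
    frozenset({"text", "image"}),
)

for model in (chatbot, transcriber, assistant):
    print(model.name, model.is_unimodal(), model.is_multimodal(), model.is_intermodal())
```

Under these assumptions, the text chatbot is unimodal, the speech-to-text transcriber is intermodal without being multi-modal, and the assistant is both multi-modal and intermodal.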

Examples and Applications of AI Multimodality

OpenAI didn’t wait long before moving towards multi-modality for its GPT models. The DALL-E image generation models are a good example of multi-modality, as one can use text or images to create new images. I often use DALL-E to come up with images for this AI Education column, prompting the model with text input, from which DALL-E delivers an image, but I could just as easily give DALL-E other images to use as examples upon which to generate a new image. With later versions of its GPT models, OpenAI added multi-modality into ChatGPT.

Google’s Gemini, on the other hand, launched as a multi-modal model, with the ability to be prompted with images, text, audio, video and code. Multi-modal models like Gemini and GPT-4o are able to think and interact more like people, and are capable of being adapted to a larger variety of different tasks than their unimodal predecessors.

Under the hood, multi-modality in AI works similarly to the way a standard large language model works with text; the machine learning architecture is merely adapted to analyze and manipulate different types of data.
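As a rough illustration of that idea, and not a description of how Gemini or GPT-4o are actually built, here is a minimal Python sketch. It assumes each modality gets its own toy encoder (the hypothetical functions `encode_text` and `encode_image`) that turns raw input into vectors of the same width, so that a single downstream model could read one mixed sequence. Every name here, the `EMBED_DIM` value and the encoding scheme itself are invented for the example.

```python
from typing import List

EMBED_DIM = 4  # hypothetical shared vector width for every modality

Vector = List[float]


def encode_text(text: str) -> List[Vector]:
    """Toy text encoder: one vector per whitespace-separated word."""
    vectors = []
    for word in text.split():
        # Fold character codes into EMBED_DIM buckets (illustrative only).
        vec = [0.0] * EMBED_DIM
        for i, ch in enumerate(word):
            vec[i % EMBED_DIM] += ord(ch) / 1000.0
        vectors.append(vec)
    return vectors


def encode_image(pixels: List[List[int]]) -> List[Vector]:
    """Toy image encoder: one vector per row of grayscale pixels (0-255)."""
    vectors = []
    for row in pixels:
        vec = [0.0] * EMBED_DIM
        for i, value in enumerate(row):
            vec[i % EMBED_DIM] += value / 255.0
        vectors.append(vec)
    return vectors


def build_sequence(text: str, pixels: List[List[int]]) -> List[Vector]:
    """Concatenate both modalities into one sequence for a single model."""
    return encode_text(text) + encode_image(pixels)


sequence = build_sequence("a red square", [[255, 0, 0, 0], [0, 255, 0, 0]])
print(len(sequence), "vectors, each of width", len(sequence[0]))
```

The point of the sketch is the shape of the data: once words and image rows are both expressed as same-width vectors, the model that follows does not need separate machinery for each modality.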

Multi-modality and inter-modality greatly expand AI’s toolbox, allowing models to retrieve data across different modalities, and, as we’ve mentioned, generate content across them as well. It makes possible more human-like robots that can interact with humans using speech, touch and visual cues. It potentially allows AI to recognize and adapt to human emotions and identify cues to health and well-being.

Why Multi-Modality Matters

The human organism is a multi-modal organism: we’re all generally using all of the senses available to us, whether we want to or not. Consider the ways in which we communicate: we speak, we gesture, we make phone calls, we send messages, we make facial expressions, we posture our bodies and change our tones of voice. Even our messages may use different modalities. Rather than just plain text, we might send images, short videos, GIFs and emojis to add emphasis or nuance. Our marvelous brains automatically take all of these seemingly disparate inputs and synthesize them into a coherent message, usually without requiring much conscious effort on our part.

If we want AI to be more human, to be as capable as a human (meaning artificial general intelligence, or AGI) or to exceed human capabilities (meaning artificial superintelligence), then creating truly multi-modal artificial intelligence is a necessary prerequisite to achieving that end. It’s not just about teaching AI to see like a human being through computer vision or hear like a human being through speech recognition; it’s about giving artificial intelligence the ability to fuse all of these modalities into a coherent message, to consistently find the intended meaning in the information, and to distinguish the intended signals in the message from the unintended noise.

Multi-modality is not just how we get to artificial general intelligence or superintelligence; it is also how we make AI accessible and usable for all people, regardless of ability or education level. Multi-modality will enable a “one-size-fits-all” AI.