Each week we find a new topic for our readers to learn about in our AI Education column.

Generative AI is capable of finding a needle in a haystack of data.

But what if AI could deconstruct the haystack and rebuild it, straw by straw, with the needle in the same place, every time?

As it turns out, there’s a kind of AI built to do roughly that, and it has revolutionized what generative AI is capable of: the diffusion model.

Diffusion models take their inspiration from physics, specifically the statistical physics of how particles disperse over time. Most of the generative AI that we’ve discussed in detail does not use the diffusion technique for training or inference. Instead, the model is repeatedly asked to make a prediction (for a chatbot, the next word in a sentence), that prediction is checked against the real answer, and the model is adjusted accordingly, becoming more accurate and reliable over time.

This is the method that public-facing AI chatbots, powered by large language models, use to learn how to understand natural-language inputs, retrieve the right information, and produce outputs in the desired format.

While this type of model is great at talking to people and retrieving data, it struggles when asked to synthesize something new or original: in some cases its output becomes repetitive and predictable over time, and in others it is simply of poor quality. Diffusion models seem to solve both of these issues, delivering results that are varied as well as detailed and high-quality.

What Is a Diffusion Model, Then?

A diffusion model works differently. Rather than learning a set of data by making predictions within it, a diffusion model adds noise to the data, eventually enough noise to obliterate any pattern or meaning that could be discerned from it. The model then learns that process in reverse, removing noise to reconstruct the original data distribution. The mathematics can get involved, but the core idea is simple: in the most common formulation, a small amount of random (Gaussian) noise is added at each of many steps according to a fixed schedule, and a neural network is trained to predict the noise that was added so that it can be taken back out. There are also different types of diffusion, with different levels of utility for different purposes, but they all follow the same basic concept: start with data, add noise until it is meaningless, and then, crucially, reverse the process.

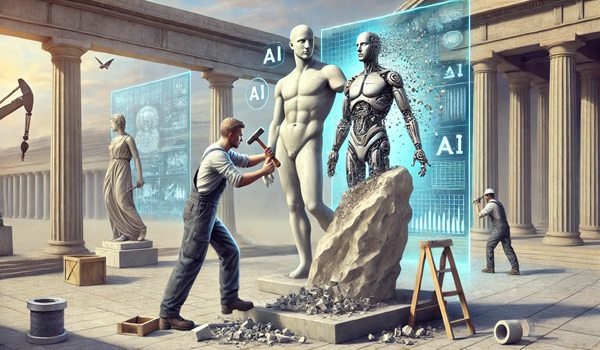
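The noise-adding half of this process is simple enough to sketch. The Python below is a minimal illustration, assuming the linear noise schedule from the original DDPM work (per-step noise amounts rising from 0.0001 to 0.02 over 1,000 steps) and a made-up 32x32 "image" of a bright square; the function names are hypothetical. At each step it shrinks the current values slightly and mixes in fresh Gaussian noise.

```python
import math
import random

def linear_betas(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts for a linear schedule (a common DDPM-style choice)."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]

def forward_diffuse(x0, betas, seed=0):
    """Add a little Gaussian noise at every step; return the fully noised data."""
    rng = random.Random(seed)
    x = list(x0)
    for beta in betas:
        keep = math.sqrt(1.0 - beta)  # shrink the current signal slightly...
        add = math.sqrt(beta)         # ...and mix in this much fresh noise
        x = [keep * v + add * rng.gauss(0.0, 1.0) for v in x]
    return x

def signal_remaining(betas):
    """Scale of the original signal left after all steps: sqrt(alpha_bar_T)."""
    return math.sqrt(math.prod(1.0 - b for b in betas))

# Hypothetical toy 32x32 "image": a bright square on a dark background,
# flattened to a list of pixel values scaled to [-1, 1]
image = [1.0 if 8 <= r < 24 and 8 <= c < 24 else -1.0
         for r in range(32) for c in range(32)]
betas = linear_betas()
noised = forward_diffuse(image, betas)
print(f"fraction of original signal left: {signal_remaining(betas):.4f}")
```

After 1,000 steps, well under 1% of the original signal's scale survives, and for practical purposes the result is pure noise: exactly the starting point the model later learns to work back from.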

Reversing that process is where the actual machine learning takes place. Think of it as Michelangelo removing chunks of marble, bit by bit, until he reveals David, but in software and data form. Once the software has learned to do this with real images, it is ready to take the next step: creation.
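In DDPM-style diffusion models, this learning step boils down to a simple loop: take a clean example, add a known amount of noise, ask the model to predict that noise, and nudge the model to shrink its error. The Python below is a deliberately tiny sketch under loud assumptions: the "data" are single numbers drawn from a bell curve with mean 2.0 and spread 0.5, the "model" is just two numbers (w and c) rather than a neural network, and training happens at one fixed noise level instead of all of them. All names and values are hypothetical.

```python
import math
import random

def linear_alpha_bars(T=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-keeping factors alpha_bar_t for a linear (DDPM-style) schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(T):
        beta = beta_start + (beta_end - beta_start) * i / (T - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def train_noise_predictor(data_mean=2.0, data_std=0.5, t=300,
                          steps=50_000, lr=0.002, seed=0):
    """Toy training loop: learn eps_hat = w * x_t + c at a single noise level t.

    Hypothetical simplification: a real model is a neural network that also
    receives t as an input and is trained on all noise levels at once.
    """
    rng = random.Random(seed)
    a_bar = linear_alpha_bars()[t]
    w, c = 0.0, 0.0
    for _ in range(steps):
        x0 = rng.gauss(data_mean, data_std)  # a clean training example
        eps = rng.gauss(0.0, 1.0)            # the noise we are about to add
        # Jump straight to noise level t (same distribution as noising step by step)
        xt = math.sqrt(a_bar) * x0 + math.sqrt(1.0 - a_bar) * eps
        err = (w * xt + c) - eps             # predicted noise minus true noise
        # Gradient-descent step on the squared error err**2
        w -= lr * 2.0 * err * xt
        c -= lr * 2.0 * err
    return w, c, a_bar

w, c, a_bar = train_noise_predictor()
print(f"learned w={w:.3f}, c={c:.3f}")
```

Because the toy data follow a bell curve, the best possible linear noise predictor can be worked out by hand, and the learned w and c should land close to it. A real image model learns a far richer version of the same mapping, for every noise level at once.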

The magic is that a diffusion model can then create something new that resembles its training data: it starts from a random sample of pure noise and denoises it, step by step, just as it learned to do with its training material. The drawback is that this level of detail and originality usually comes at a high cost in computing power and energy, and most such models take a relatively long time to complete their tasks, partly because producing a single image means running the model once per denoising step, often dozens or even hundreds of times. If you don’t believe us, compare how quickly OpenAI’s ChatGPT answers your text-based questions with how long it takes DALL-E to generate an image from a simple prompt. Text moves much, much faster.
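The generation loop, and the reason it is slow, can be sketched in the same toy setting. This minimal Python example follows the DDPM-style sampling recipe: start from pure noise and, at each of 1,000 steps, remove the noise the model predicts and add back a little fresh randomness. To keep it self-contained, the trained network is replaced by a hypothetical `ideal_noise_predictor`, which is the exact answer only because the toy data are assumed to follow a bell curve with mean 2.0 and spread 0.5.

```python
import math
import random

def linear_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts (betas) and their cumulative alpha_bar values."""
    betas = [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return betas, alpha_bars

def ideal_noise_predictor(xt, alpha_bar, data_mean, data_std):
    """Hypothetical stand-in for a trained network: the exact expected noise
    given x_t when the data are Gaussian. Real models learn this from data."""
    var_xt = alpha_bar * data_std ** 2 + (1.0 - alpha_bar)
    return math.sqrt(1.0 - alpha_bar) * (xt - math.sqrt(alpha_bar) * data_mean) / var_xt

def sample(n=500, data_mean=2.0, data_std=0.5, seed=0):
    """DDPM-style ancestral sampling: start from pure noise and denoise step by step."""
    rng = random.Random(seed)
    betas, alpha_bars = linear_schedule()
    samples = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)  # pure noise
        for t in reversed(range(len(betas))):
            beta, a_bar = betas[t], alpha_bars[t]
            eps_hat = ideal_noise_predictor(x, a_bar, data_mean, data_std)
            # Remove this step's share of the predicted noise and undo the slight shrinking
            x = (x - beta / math.sqrt(1.0 - a_bar) * eps_hat) / math.sqrt(1.0 - beta)
            # Add back a little fresh noise, except on the final step
            if t > 0:
                x += math.sqrt(beta) * rng.gauss(0.0, 1.0)
        samples.append(x)
    return samples

samples = sample()
mean = sum(samples) / len(samples)
spread = math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))
print(f"mean={mean:.2f}, spread={spread:.2f}")
```

Every sample here costs 1,000 calls to the noise predictor. In a real image model, each of those calls is a full pass through a large neural network, which is where the time and energy go. The samples that come out should cluster around 2.0 with a spread near 0.5, matching the distribution the toy data were assumed to come from.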

Why Are Diffusion Models Useful?

Diffusion models excel at creating non-text media, particularly images. To train one on images, the model takes training images and diffuses them with noise, which in this case means random changes to pixel values, until the images are obliterated, and then learns the process in reverse. (Some models, such as Stable Diffusion, do this on a compressed version of each image rather than on the raw pixels.) Having been trained on huge databases of images, the model is ready to carve out new images from noise that it generates at random.

In other words, diffusion models are powerhouse image generators. Many of the public-facing image generators offered by major AI providers, such as OpenAI’s DALL-E, are in reality a combination of a diffusion model and a large language model (LLM).

In these systems, the LLM translates the user’s prompt into a form the diffusion model can use, typically a numerical representation of what the prompt means. The diffusion model then generates an image from noise, using that translated prompt as a guide throughout the denoising process. The LLM then usually delivers the finished image to the user and offers the chance to edit it with natural-language prompts rather than traditional image-editing tools.
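One common way a prompt steers the denoising is classifier-free guidance, used by models such as Stable Diffusion and Imagen: at each step the denoiser is run twice, once with the encoded prompt and once without, and the difference between the two predictions is amplified. The Python sketch below shows only that combination step. `toy_predict_noise` is a hypothetical stand-in for a trained network, and the default guidance scale of 7.5 is an illustrative assumption.

```python
from typing import Callable, List, Optional

Vector = List[float]
# Hypothetical signature: (noisy input, timestep, prompt embedding or None) -> predicted noise
NoisePredictor = Callable[[Vector, int, Optional[Vector]], Vector]

def guided_noise_prediction(predict_noise: NoisePredictor, x_t: Vector, t: int,
                            prompt_embedding: Vector,
                            guidance_scale: float = 7.5) -> Vector:
    """Classifier-free guidance: combine prompt-conditioned and unconditioned predictions."""
    eps_uncond = predict_noise(x_t, t, None)            # prediction ignoring the prompt
    eps_cond = predict_noise(x_t, t, prompt_embedding)  # prediction given the prompt
    # Start from the unconditioned guess and move further toward the prompted one
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

def toy_predict_noise(x_t: Vector, t: int, prompt_embedding: Optional[Vector]) -> Vector:
    """Hypothetical stand-in for a trained network, for illustration only."""
    if prompt_embedding is None:
        return list(x_t)  # without a prompt: treat the whole input as noise
    # With a prompt: treat whatever differs from the prompt as noise
    return [x - p for x, p in zip(x_t, prompt_embedding)]

guided = guided_noise_prediction(toy_predict_noise, [0.5, -0.5], t=10,
                                 prompt_embedding=[1.0, 0.0], guidance_scale=2.0)
print(guided)
```

A guidance scale of 1.0 simply uses the prompted prediction; larger values make the image follow the prompt more literally, typically at some cost in variety.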

What Else Can They Do?

New applications for diffusion models, and for compound AI that blends diffusion models with other types of AI, are being actively studied, and the diffusion technique appears to be especially effective for visual and spatial applications. Accordingly, applications are being explored in medical diagnostics, including the analysis of medical imaging, as well as in drug discovery and materials science. Diffusion models are also finding uses in telecommunications and in finance, where they are being employed for some types of financial modeling.

More broadly, whenever AI is asked to be creative, diffusion models seem to have a role to play. Their utility is also being tested in audio and video, not only to generate sound and video but also to clean up and restore old or damaged recordings. Machine-learning restoration of this kind helped the surviving members of The Beatles and their producers isolate John Lennon’s voice from an old recording to produce what has been hailed as the band’s very last song, “Now and Then,” although public accounts do not make clear whether a diffusion model was part of that process.

On the darker side of things, diffusion models are, in part, helping to power the ongoing surge in deepfake technology and fraudulent image, video and audio files proliferating across the internet and social media.

We’ve already offered up OpenAI’s DALL-E as one example of a diffusion model wedded to a large language model. Other large technology providers have their own offerings, such as Google’s Imagen for images and the experimental Gemini Diffusion, which applies the technique to text. Another prominent example is Stability AI’s Stable Diffusion, often considered best-in-class, which can generate highly realistic images.